Touch is an important channel of emotional and social communication. It shapes development, interpersonal bonding, and the interpretation of others’ intentions. Increasing evidence shows that humans can extract rich sensory and affective information simply by observing touch. Earlier research has focused on somatosensory mirroring, which suggests that observers internally simulate the tactile sensations of others. However, the visual system alone may encode much of the sensory and emotional information needed to understand observed touch.

This study aimed to investigate how the brain processes different aspects of visually perceived touch, such as sensory features, body cues, and emotional-affective dimensions, and to determine when these representations emerge in the EEG signal and which neural frequencies carry this information.

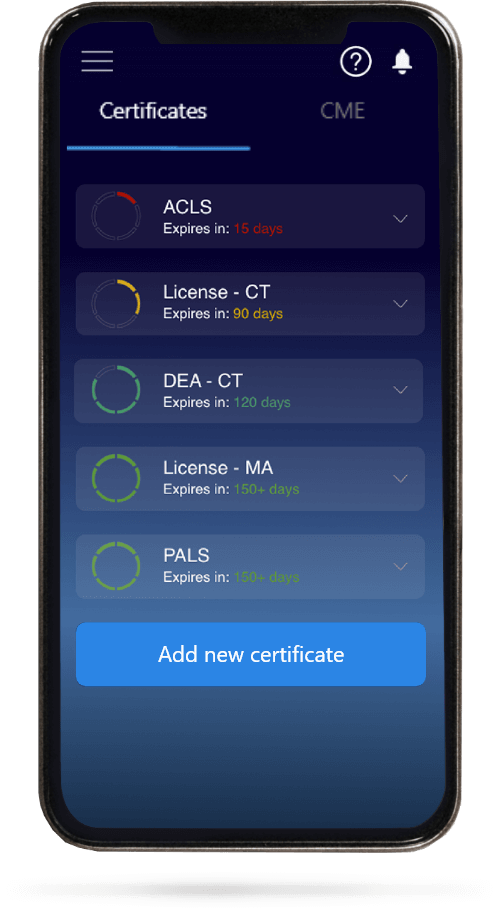

The study used 90 short videos from the Validated Touch-Video Database, each depicting a different hand-based touch interaction. The authors created 600-ms clips that were shown in four orientations. A separate group of participants rated these modified videos to confirm that key perceptual properties such as valence, pain, threat, and arousal matched the original dataset. In the EEG experiment, eighty participants viewed rapid, randomized sequences of the videos while performing an attention task. EEG data were recorded from 64 channels, pre-processed, segmented, and analyzed using time-resolved multivariate decoding and frequency-domain decoding. The analyses predicted continuous emotional ratings using ridge regression and decoded categorical information, such as hand orientation, perspective, material, object type, touch type, and skin versus object contact, using regularized linear discriminant analysis (LDA). Visual confounds such as entropy, luminance, and AlexNet features were regressed out to ensure that decoding reflected touch-related information rather than low-level visual differences.
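The time-resolved ridge analysis described above can be illustrated with a minimal sketch. This is not the authors' pipeline: it uses NumPy only, runs on synthetic data, and assumes that the confound is regressed out of the rating rather than out of the EEG. The helper names (`residualize`, `ridge_fit`, `time_resolved_ridge`), the fold count, and the regularization strength are all hypothetical choices made for illustration.

```python
import numpy as np

def residualize(y, confounds):
    """Remove the part of y that is linearly predictable from the confounds."""
    X = np.column_stack([np.ones(len(y)), confounds])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

def ridge_fit(X, y, alpha):
    """Closed-form ridge weights (no intercept; inputs are centered by the caller)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def time_resolved_ridge(eeg, y, alpha=1.0, n_folds=5, seed=0):
    """Cross-validated correlation between predicted and true ratings per time point.

    eeg: array (n_trials, n_channels, n_times); y: array (n_trials,).
    """
    n_trials, _, n_times = eeg.shape
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_trials), n_folds)
    scores = np.zeros(n_times)
    for t in range(n_times):
        pred = np.zeros(n_trials)
        for test_idx in folds:
            train = np.ones(n_trials, dtype=bool)
            train[test_idx] = False
            X_train, X_test = eeg[train, :, t], eeg[test_idx, :, t]
            mu_x, mu_y = X_train.mean(axis=0), y[train].mean()
            w = ridge_fit(X_train - mu_x, y[train] - mu_y, alpha)
            pred[test_idx] = (X_test - mu_x) @ w + mu_y
        scores[t] = np.corrcoef(pred, y)[0, 1]
    return scores

# Synthetic demo (all values are made up for illustration).
rng = np.random.default_rng(42)
n_trials, n_channels, n_times = 200, 16, 30
confound = rng.normal(size=n_trials)            # stand-in for e.g. luminance
rating = rng.normal(size=n_trials) + 0.5 * confound
rating_clean = residualize(rating, confound)    # rating with the confound removed

eeg = rng.normal(size=(n_trials, n_channels, n_times))
pattern = rng.normal(size=n_channels)           # hypothetical scalp pattern
# Inject rating-related signal only from time index 15 onward.
eeg[:, :, 15:] += 0.5 * rating_clean[:, None, None] * pattern[None, :, None]

scores = time_resolved_ridge(eeg, rating_clean)
```

In this toy setup, `scores` stays near zero for the first 15 time points and rises once the injected signal appears, which is the kind of onset that time-resolved decoding is designed to reveal. The categorical features would follow the same loop with a classifier in place of the ridge model.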

Time-resolved decoding showed that body cues, including left-right hand and self-other perspective, were extracted extremely fast, with reliable decoding beginning around 60 ms and increasing by 120-130 ms. This indicates that the visual system rapidly identifies the body part involved and the viewpoint. Sensory characteristics were also represented early. Object type and material were decodable by 110 to 120 ms, skin versus object contact by 140 ms, and touch type, such as pressing versus stroking, by 165 ms. This information initially emerged over posterior visual electrodes and later spread to frontal, central, and temporal regions. Emotional-affective dimensions showed a mixed temporal profile.

Valence was decodable early, at around 130 ms, and pain at around 135 ms (reliably from 240 ms), followed by threat at 230 ms and arousal at 260 ms, with decoding peaking between 300 and 400 ms. The later-emerging dimensions required more processing time, yet their scalp distributions indicated strong visual involvement, suggesting that even complex evaluations of intensity or harm are rooted in visual pathways.

Frequency-domain decoding demonstrated that body-related cues were encoded across a broad spectral range, most strongly in the theta, alpha, and low beta bands (6 to 20 Hz). Sensory and emotional features were primarily represented in the lower-frequency delta, theta, and alpha bands (1 to 13 Hz). This is consistent with the known role of slow oscillations in emotion processing, tactile imagery, and multisensory integration. Emotion-related features showed weaker but reliable frequency signatures, specifically for valence.
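As a rough illustration of what decoding from frequency-domain features involves, the sketch below computes band power with an FFT and classifies two synthetic conditions separately within each band. It is a simplified stand-in, not the method used in the study: the band edges are conventional values assumed here, the nearest-centroid classifier replaces regularized LDA for brevity, and `band_power` and `nearest_centroid_cv` are hypothetical helper names.

```python
import numpy as np

# Conventional band edges in Hz (an assumption; the study's exact bins may differ).
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}

def band_power(epochs, sfreq, bands=BANDS):
    """Mean spectral power per band.

    epochs: array (n_trials, n_channels, n_samples).
    Returns a dict mapping band name to an array (n_trials, n_channels).
    """
    n_samples = epochs.shape[-1]
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / sfreq)
    window = np.hanning(n_samples)              # taper to limit spectral leakage
    power = np.abs(np.fft.rfft(epochs * window, axis=-1)) ** 2
    return {
        name: power[..., (freqs >= lo) & (freqs < hi)].mean(axis=-1)
        for name, (lo, hi) in bands.items()
    }

def nearest_centroid_cv(X, y, n_folds=5, seed=0):
    """Cross-validated accuracy of a two-class nearest-centroid classifier."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    correct = 0
    for test_idx in folds:
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False
        centroids = np.stack([X[train & (y == c)].mean(axis=0) for c in (0, 1)])
        dists = np.linalg.norm(X[test_idx][:, None, :] - centroids[None], axis=-1)
        correct += np.sum(dists.argmin(axis=1) == y[test_idx])
    return correct / len(y)

# Synthetic demo: class 1 carries an extra 10 Hz (alpha) oscillation.
rng = np.random.default_rng(0)
sfreq, n_samples, n_trials, n_channels = 250, 250, 100, 8
t = np.arange(n_samples) / sfreq
labels = np.tile([0, 1], n_trials // 2)
epochs = rng.normal(size=(n_trials, n_channels, n_samples))
phase = rng.uniform(0, 2 * np.pi, size=(n_trials, 1, 1))
epochs += labels[:, None, None] * np.sin(2 * np.pi * 10 * t + phase)

features = band_power(epochs, sfreq)
acc = {name: nearest_centroid_cv(np.log(p), labels) for name, p in features.items()}
```

Because the class difference was injected at 10 Hz, only the alpha entry of `acc` should be well above chance. Comparing accuracy across bands in this way indicates which frequencies carry the decodable information.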

These results show that most aspects of observed touch, whether structural, sensory, or emotional, are encoded rapidly, within the first 150 to 200 ms of visual processing. This encoding relies on oscillatory mechanisms linked with integrative perceptual and affective functions. The study shows that the visual system plays a central role in interpreting touch, rapidly extracting body information, material properties, and emotional meaning before extensive sensorimotor or cognitive processing takes place. These findings clarify how the brain integrates visual, sensory, and emotional cues during touch perception and have implications for understanding vicarious touch experiences, individual differences in empathy, and applications such as multisensory prosthetic design and visuo-tactile interfaces.

Reference: Smit S, Ramírez-Haro A, Quek GL, Varlet M, Moerel D, Grootswagers T. Rapid visual engagement in neural processing of detailed touch interactions. Imaging Neuroscience. 2025;3:IMAG.a.1017. doi:10.1162/IMAG.a.1017